How we ended up with OpenAPI

This is a story about how we moved our product development from the traditional Code First approach, where the API description is generated from the code, to the Design First approach, where the code is built from a document based on the OpenAPI 3.0 schema.

TL;DR

For a long time, we worked with an unstructured WebSocket API of our own design, but we realized that it could not stay that way and switched to the OpenAPI 3.0 (formerly Swagger) Specification. During the migration, it became clear that a crucial part of it was moving the API schema to a separate repository. The point of the change was not to obtain a machine-readable description of the API, but to introduce a formalized, software-validated format for the technical specification at the product design stage.

Experiment with a single data tree

Six years ago, our video streaming software had an admin panel written in AngularJS that chaotically downloaded settings and runtime data from the server, stitched them together somehow, and displayed them. Since our AngularJS code quickly turned into a mess, we started planning a migration to React and decided to test the concept of rendering a single data tree in React.

Redux was not yet in widespread use, and even today we prefer to stay away from it.

The idea is quite simple: React renders from a fixed data state. In React, it is possible to separate components that encapsulate different behavior, such as receiving data, displaying it, and passing it up the component tree. Taking the idea further, we can make the root the only point where data is updated: it contains a component that receives the data from the server and displays it. The data comes as a single tree and is then rendered as a whole.

The first experiment showed that updating the streams’ data in the Admin UI once per second (to display the current bit rate, number of clients, etc.) required up to 50 Mbps of traffic, which is madness. However, we decided not to abandon the idea but to work out the kinks.

This is how the delta protocol was born, which we used for a long time.

The main point of the approach is as follows: to report a modification of an object, it is enough to send a partial object that contains only the modified fields. If the object is nested, mini-delta objects with a partial hierarchy are sent. It sounds easy, and it became easier once we switched from arrays to objects in the data we transfer.

For example, we changed the collection of streams from an array to an object in which the stream names are the keys. This is a piece of cake when the data has natural primary keys, but challenging when it has none. We also decided to always update array-like data as a whole, without partial updates.

The server sends deltas, that is, changes relative to the initial data, to the admin panel over a WebSocket connection, so the client always has an up-to-date picture.

The same delta protocol is used to transmit updated data from the Admin UI to the server, i.e. only the modified data is sent. A data tree allows you to make a single request for a large number of different changes.
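To make the idea more concrete, here is a minimal sketch of how a client might merge such a delta into its state. The `applyDelta` and `isPlainObject` helpers and the sample stream fields are hypothetical and are not taken from our code base; removal of keys is left out on purpose.

```javascript
// Hypothetical helper: true for plain objects, false for arrays, null, and scalars.
function isPlainObject(value) {
  return value !== null && typeof value === 'object' && !Array.isArray(value);
}

// Hypothetical "apply changes": merge a partial object into the current state
// without mutating the original state.
function applyDelta(state, delta) {
  const result = { ...state };
  for (const [key, value] of Object.entries(delta)) {
    result[key] = isPlainObject(value) && isPlainObject(state[key])
      ? applyDelta(state[key], value) // nested mini-delta: merge recursively
      : value;                        // scalar or array: replace as a whole
  }
  return result;
}

// Streams are keyed by name, so a delta can address a single stream.
const state = {
  streams: {
    cam1: { bitrate: 2500, clients: 3, inputs: ['udp://239.0.0.1:1234'] },
    cam2: { bitrate: 1800, clients: 0, inputs: [] },
  },
};

// Only the modified fields of cam1 travel over the wire.
const delta = { streams: { cam1: { bitrate: 2600, clients: 4 } } };
const next = applyDelta(state, delta);
```

After the merge, `next.streams.cam1` has the new bit rate and client count, while its `inputs` array and the whole `cam2` entry are carried over unchanged.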

Let’s have a look at another case. While one user is editing the configuration in the Admin UI, another may already have saved the same changes. In that case, by the time the first user is about to submit, the Save button may disappear, because there is nothing left to save. This scenario is easy to implement with two functions of the delta protocol: apply changes and calculate changes.

However, when there are 1 000 or more streams on the server, the amount of data dumped into the admin panel becomes excessive and consumes a lot of CPU resources. This was a long-standing issue of the Flussonic Admin UI that customers complained about, and it was hard to solve with this approach.
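The scenario above can be sketched roughly as follows. The `calcDelta` function is a hypothetical "calculate changes" helper written for this illustration, and the field names are made up; it ignores removed keys and compares arrays as a whole via `JSON.stringify`.

```javascript
// Hypothetical helper: true for plain objects, false for arrays, null, and scalars.
function isPlainObject(value) {
  return value !== null && typeof value === 'object' && !Array.isArray(value);
}

// Hypothetical "calculate changes": return only the fields of `next`
// that differ from `prev`, keeping the partial hierarchy.
function calcDelta(prev, next) {
  const delta = {};
  for (const [key, value] of Object.entries(next)) {
    const old = prev[key];
    if (isPlainObject(value) && isPlainObject(old)) {
      const nested = calcDelta(old, value);
      if (Object.keys(nested).length > 0) delta[key] = nested;
    } else if (JSON.stringify(value) !== JSON.stringify(old)) {
      delta[key] = value; // scalars and arrays are sent as a whole
    }
  }
  return delta;
}

// State known from the server, and the first user's unsaved form.
const server = { streams: { cam1: { title: 'Lobby', dvr: false } } };
const form = { streams: { cam1: { title: 'Lobby', dvr: true } } };
const pending = calcDelta(server, form);

// A second user saves the same change; the server delta has been applied locally.
const serverAfter = { streams: { cam1: { title: 'Lobby', dvr: true } } };
const pendingAfter = calcDelta(serverAfter, form);

// Nothing left to submit, so the Save button can be hidden.
const showSaveButton = Object.keys(pendingAfter).length > 0;
```

Before the second user's save, `pending` contains only the `dvr` field of `cam1`; afterwards the calculated delta is empty and `showSaveButton` is `false`.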

But more importantly, we missed the opportunity to design a consistent and standardized public API for our clients. We will discuss it in further detail below.

How to design an HTTP API in 2021

Previously, we had built not an API but rather a protocol that kept the internal state of our system in sync on both sides: the server and the Admin UI. Now we are moving towards an API.

Choosing the direction of further development, we raised the following questions:

- Are there any generally accepted standards on how to make HTTP requests to read and modify the data?

- Are there any generally accepted specifications for describing such an API, as WSDL does for SOAP or Protocol Buffers do for gRPC?

There is only one answer to the second question today: OpenAPI 3. The first question, however, remained open.

Set of conventions for the HTTP API (REST)

First of all, let’s see what a REST API is. In practice, the term usually means a pragmatic, minimal HTTP API, although that is a loose use of the word. The term REST is used to distinguish such an API from a SOAP API (where a web server and a client communicate through XML documents with encoded commands and responses sent over a single HTTP URL).

Also, REST does not equal JSON API, which differs from SOAP only by replacing XML with JSON.

Most REST APIs used in the field today are not “truly REST” from a purist point of view.

Still, here are some best practices for HTTP API development that we identified:

- Use different HTTP URLs to point to different objects. A set of HTTP URLs is far better than a single SOAP endpoint.

- Use different HTTP methods to distinguish requests that read data from requests that modify it.

- Use HTTP response codes, so that the API itself benefits from what the HTTP protocol already provides.

- Use JSON as the format for sending and receiving data. Previously it was XML, which, together with XSLT in the browser, allowed you to transform XML into HTML. There was little practical sense in that, so it fell out of use.

After a decade of HTTP and JSON development, there was still no agreement on a standard way to request and edit an object stored on another server. REST in its original form was an attempt to bring this idea to life, but it did not stick.
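As a rough illustration of these practices, the sketch below routes requests over an in-memory collection and answers with JSON-ready bodies and HTTP status codes. The `handle` function, the `sessions` map, and the particular status codes are assumptions made for this example, not our server code.

```javascript
// Hypothetical in-memory collection, keyed by session ID.
const sessions = new Map([['1', { id: '1', user: 'alice' }]]);

// Map (method, path) to a status code and a JSON-serializable body.
function handle(method, path, body) {
  const [, collection, id] = path.split('/');
  if (collection !== 'sessions') return { status: 404 };

  // Collection URL: only reading is supported in this sketch.
  if (id === undefined) {
    return method === 'GET'
      ? { status: 200, body: [...sessions.values()] }
      : { status: 405 };
  }

  // Item URL: the HTTP method tells reading from modifying.
  switch (method) {
    case 'GET':
      return sessions.has(id)
        ? { status: 200, body: sessions.get(id) }
        : { status: 404 };
    case 'PUT':
      sessions.set(id, { ...body, id });
      return { status: 200, body: sessions.get(id) };
    case 'DELETE':
      return sessions.delete(id) ? { status: 204 } : { status: 404 };
    default:
      return { status: 405 };
  }
}

const listed = handle('GET', '/sessions');
const missing = handle('GET', '/sessions/2');
const saved = handle('PUT', '/sessions/2', { user: 'bob' });
const removed = handle('DELETE', '/sessions/2');
```

The client can tell a missing object (404) from an unsupported action (405) without parsing the body, which is exactly what the response codes are for.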

So we went for something similar to the Rails practices:

- Use simple names (plural nouns) to access collections: GET /sessions

- Use logical nesting on an endpoint to access an object from a collection: GET (PUT, DELETE) /sessions/1 (where “1” is a session ID)

- Use a query string for filtering and sorting collections, but with some restrictions. If more functionality is needed, we plan to implement search via a POST request with a JSON query language in the style of the MongoDB query language (though, for a number of reasons, we most likely won’t).

The options we considered for HTTP filtering and sorting will be discussed in more detail in a separate article.
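The naming conventions above could be captured in two small helpers like the ones below. This is only a sketch: the function names, the `sort` parameter, the `-` prefix for descending order, and the `country` and `opened_at` fields are all assumptions made for illustration.

```javascript
// Build a collection URL: plural noun plus a query string for filtering and sorting.
function collectionUrl(base, collection, { filter = {}, sort } = {}) {
  const url = new URL(`/${collection}`, base);
  for (const [field, value] of Object.entries(filter)) {
    url.searchParams.set(field, value);
  }
  if (sort) url.searchParams.set('sort', sort);
  return url.toString();
}

// Build an item URL: the object ID is nested under the collection and escaped.
function itemUrl(base, collection, id) {
  return new URL(`/${collection}/${encodeURIComponent(id)}`, base).toString();
}

const listUrl = collectionUrl('http://example.com', 'sessions', {
  filter: { country: 'US' },
  sort: '-opened_at',
});
const oneUrl = itemUrl('http://example.com', 'sessions', 1);
```

Escaping the ID with `encodeURIComponent` matters as soon as identifiers may contain characters such as `/`.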

API first or not?

Spoiler: yes.

Schema from code

Now let’s delve into the details of this approach. For a long time, we did not see the point of keeping and maintaining schemas and contracts in a separate (yet another) repository: it is a pain in the neck, because you have to edit things in two places all the time.

As long as one or two people are responsible for the project and its API, writing the API specification before the code and storing it in a separate repository seems an unnecessary and time-consuming exercise.

It might seem that choosing whether to generate the schema from the code or the code from the schema is just a matter of the development team’s preference, like choosing a programming language or the number of spaces for indentation. This is a critical misconception.

Writing code and then generating a schema from it makes no sense. Why is it pointless? A developer structures the program according to whatever idea comes to mind, and the schema simply reflects that. As a result, there is no guarantee that the schema will not make a 180-degree turn later.

Code First also means that the API is designed by the programmers themselves. The task of designing the API is given to them orally and postponed until the code is ready. The code may also be written by different people, which lowers the quality of the API. API design is put off until the last minute (another six months after all the missed deadlines).

What do we get at the end of the day? Product knowledge is expressed in the code rather than in a formal description. Documenting such an API is painful. Any technical writer who has hunted for fragments of a request and run around looking for the list of possible field values will tell you this. Customers who regularly receive something they did not expect from the API will also have something to say about it.

From an SDLC (Software Development Life Cycle) point of view, when the schema is generated from the code, the design stage is rushed, and the person who designs the API has no chance of producing something sound.

When recreating a schema from code, you inevitably face the task of going through the entire code base and extracting all the possible fields and error states of the returned data. In our experience, with about half a billion lines of code this can take up to several months.

API-first

Everything changes when the API schema is edited first.

Firstly, we get an opportunity to focus on designing a nice and convenient API for the product without the burden of thousands of lines of code that work with each field. At this stage, you should think months and years ahead, and there is time and opportunity for that.

The opportunity to work calmly, without delving into implementation details (we cannot write any code yet, so there is nothing to dig into), makes it possible to do the whole job thoroughly and with concentration.

Secondly, a separate schema repository is crucial. It is much easier there to notice changes, track them, and set up processes that require review from colleagues. The contract describing how the product works is now stored explicitly, and the code follows it. Contract changes become more structured, understandable, and predictable.

Front-end developers will no longer be driven mad by unannounced backend changes, and they can take part in the modification process themselves.

A product manager can write system requirements in a form suitable both for programmers and for programs like Postman. A front-end developer can start working as soon as the schema is modified, without waiting for the backend. So can technical writers: they can write examples and documentation right there, without waiting for the backend.

Additionally, you can generate an SDK and offer it to customers. You can live almost as comfortably as 20 years ago with CORBA, only without its binary hell, or almost as conveniently as with gRPC, while keeping standard HTTP.

OpenAPI first

What is the main point of moving from schema generation (or from life without a schema, which is much the same) to generating code from the schema? We now have a set of tools for designing the API contract. The developer now has clear and precise acceptance criteria for the task: if it is clear how to build a button on the screen with this data, the work is done; if not, it is not. Colleagues can join at this stage and discuss specific merge requests in GitLab with specific questions, starting to test and experiment already at the task formulation stage. All of this is possible because the contracted API schema, as opposed to a text formulation of the problem, is itself a software tool.

Design

The contracted project becomes an agreement around which all the code is built. It’s easier for programmers to go towards the aim without drowning in excessive design.

It is crucial to distinguish a plain-text formulation of the project from a contracted one. A plain-text specification is like a novel: a text that is subject to interpretation. A novel, as a rule, can be intricate at the beginning and crumpled at the end, just like Game of Thrones. Such a text is written by people who are not really responsible for the implementation, for other people who know in advance that they will have to figure things out along the way, and especially at the end.

The API First approach allows you to set the limits and the acceptance criteria for the job. The schema is either ready or not. It is much easier to verify the validity and integrity of a schema than to review the code and reconstruct all possible response fields and their values from it.

We, too, used to follow the principle “months of coding save hours of design”, but decided to take a chance and try the other way around.

Parallel jobs

You have probably already guessed that a technical writer can start documenting the schema while it is still under construction, i.e. before the first line of code is written, and start giving feedback on how awesome it is, or the opposite.

When can a tester start work? That’s right, from the first lines of the schema, because now they have a contract (even if it is partial). It is a lot easier to interact with colleagues, because “I pressed something and everything broke” now becomes “I am sending a valid request and receive something that is not in the specification.”
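A tester's check of "something that is not in the specification" might look roughly like the sketch below. The `violations` function is a deliberately tiny, hypothetical checker that understands only `type`, `required`, and `properties`, and treats unknown fields as violations; a real project would rely on a complete JSON Schema validator. The `streamSchema` fields are made up for the example.

```javascript
// Hypothetical checker: list the ways a value deviates from a (very small) schema subset.
function violations(schema, value, path = '$') {
  const actual = Array.isArray(value) ? 'array' : value === null ? 'null' : typeof value;
  const expected = schema.type === 'integer' ? 'number' : schema.type;
  if (expected && actual !== expected) {
    return [`${path}: expected ${schema.type}, got ${actual}`];
  }
  if (schema.type === 'integer' && !Number.isInteger(value)) {
    return [`${path}: expected integer, got ${value}`];
  }
  if (actual !== 'object') return [];

  const problems = [];
  for (const name of schema.required ?? []) {
    if (!(name in value)) problems.push(`${path}.${name}: missing required field`);
  }
  for (const [name, fieldValue] of Object.entries(value)) {
    const fieldSchema = (schema.properties ?? {})[name];
    if (!fieldSchema) {
      problems.push(`${path}.${name}: not in the specification`);
    } else {
      problems.push(...violations(fieldSchema, fieldValue, `${path}.${name}`));
    }
  }
  return problems;
}

// Assumed schema of a response object.
const streamSchema = {
  type: 'object',
  required: ['name'],
  properties: { name: { type: 'string' }, clients: { type: 'integer' } },
};

// A response with a wrongly typed field and an undocumented one.
const report = violations(streamSchema, { name: 'cam1', clients: '3', uptime: 12 });
```

Here `report` contains two entries, one for `clients` arriving as a string and one for the undocumented `uptime` field, which is the kind of precise bug report the contract makes possible.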

Swagger parser

The first tool you need when working with a schema is @apidevtools/swagger-parser. All other tools fall short, to put it mildly. Without it, you will not be able to parse even the simplest schema.

Validators

A linter in the repository with schemas is a must-have. If there’s no linter, nothing can be merged. All child project restrictions (your Erlang, Rust, Go, Swift code) must be reflected in the rules of a linter.

ibm-openapi-validator is good and helpful. Speccy and Spectral seem weaker without swagger-parser.
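A project-specific restriction can be expressed as a small rule over the parsed schema. The sketch below is written as a plain function rather than in the rule format of any particular linter; the `lintOperationIds` name and the snake_case convention are assumptions chosen for illustration.

```javascript
// HTTP methods that may appear as keys of an OpenAPI 3.0 path item.
const HTTP_METHODS = ['get', 'put', 'post', 'delete', 'options', 'head', 'patch', 'trace'];

// Hypothetical rule: every operation needs a unique, snake_case operationId.
function lintOperationIds(spec) {
  const errors = [];
  const seen = new Set();
  for (const [path, item] of Object.entries(spec.paths ?? {})) {
    for (const method of HTTP_METHODS) {
      const operation = item[method];
      if (!operation) continue;
      const where = `${method.toUpperCase()} ${path}`;
      const id = operation.operationId;
      if (!id) {
        errors.push(`${where}: operationId is missing`);
      } else if (!/^[a-z][a-z0-9_]*$/.test(id)) {
        errors.push(`${where}: operationId "${id}" is not snake_case`);
      } else if (seen.has(id)) {
        errors.push(`${where}: operationId "${id}" is duplicated`);
      } else {
        seen.add(id);
      }
    }
  }
  return errors;
}

// Made-up schema fragment with one good and two bad operations.
const lintErrors = lintOperationIds({
  paths: {
    '/streams': { get: { operationId: 'streams_list' } },
    '/streams/{name}': { get: { operationId: 'streamGet' }, put: {} },
  },
});
```

Running such a rule in CI and refusing to merge when it returns a non-empty list is one way to keep the restrictions of downstream code generators visible in the schema repository.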

Postman

Get used to Postman: it is good, although there are questions about its native support for OpenAPI 3.

We have now written our own code generator for Erlang and will most likely bring it to open source status.

Mock server

Front-end developers will appreciate spec-based mock servers, which let them work while the backend, even with its rapid development frameworks, is still catching up.

There is Prism, but we did not like it and it did not suit us. Therefore, we had to make our own mock server based on openapi-sampler and a simple Express application.
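The core of a spec-based mock can be sketched in a few lines. The code below does not use openapi-sampler or Express: `sample` is a hand-written stand-in for a schema sampler, `mockResponse` matches path templates literally, and the schema fragment is made up, so treat it as an illustration of the idea rather than as our mock server.

```javascript
// Hypothetical sampler: produce a value that matches a schema,
// preferring an explicit `example` when the schema provides one.
function sample(schema) {
  if (schema.example !== undefined) return schema.example;
  switch (schema.type) {
    case 'object':
      return Object.fromEntries(
        Object.entries(schema.properties ?? {}).map(([name, s]) => [name, sample(s)])
      );
    case 'array':
      return [sample(schema.items ?? {})];
    case 'string':
      return schema.enum ? schema.enum[0] : 'string';
    case 'integer':
    case 'number':
      return 0;
    case 'boolean':
      return true;
    default:
      return null;
  }
}

// Look up the 200 JSON response of an operation and answer with a sampled body.
function mockResponse(spec, method, path) {
  const operation = spec.paths?.[path]?.[method.toLowerCase()];
  const schema = operation?.responses?.['200']?.content?.['application/json']?.schema;
  return schema ? { status: 200, body: sample(schema) } : { status: 404 };
}

// Made-up schema fragment.
const mockSpec = {
  paths: {
    '/streams': {
      get: {
        responses: {
          200: {
            content: {
              'application/json': {
                schema: {
                  type: 'array',
                  items: {
                    type: 'object',
                    properties: {
                      name: { type: 'string', example: 'cam1' },
                      clients: { type: 'integer' },
                    },
                  },
                },
              },
            },
          },
        },
      },
    },
  },
};

const mocked = mockResponse(mockSpec, 'GET', '/streams');
```

With examples written into the schema, the same data serves the documentation and the mock, so the front end sees realistic values before any backend code exists.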

OpenAPI OperationId

While we were implementing OpenAPI, colleagues raised a number of questions, mostly rooted in established habits. OpenAPI has the concept of an operationId, and it is indeed a great thing, but the question was: “Why is it necessary when URLs can simply be assembled by hand?”

OpenAPI describes data transmission over HTTP, so it seems logical to use ordinary URLs, especially as we put so much effort into choosing their names and parameter names precisely and elegantly. Yet operationIds exist alongside the URLs.

So why do we need both, and why use an operationId when there are clear and convenient URLs? Compare:

apiStreamer.init(`/streams/${name}`, 'put', null, dataForUpdate)

apiStreamer.init(`/streams/${mediaName}`, 'get')

and

client.chassis_interface_save({name}, dataForUpdate)

What is the difference between the two? The first uses URLs in the code, the second uses an OpenAPI operationId.

OpenAPI provides more than a list of URLs and parameter validation. It allows describing a full-blown RPC (Remote Procedure Call) based on a fairly understandable, readable, and familiar HTTP.

Why is the operationId approach durable and reliable?

- Functions generated from operationIds are easier to maintain and are human-readable. The example that assembles URLs by hand not only contains a bug (name can contain /), but also requires extra effort to check by eye that each URL matches its method.

- You can grep for an operationId and find all the calls of an API method. You cannot do the same with hand-made URLs such as /streams/${urlescape(name)}/inputs/${selected_input.index}: how would you write a regex for that?

- operationIds give you a complete list of functions that you can retrieve and read, which also comes in handy.

But these are all minor points. The essential thing is that, in any case, all API calls end up wrapped in intermediate functions that know which URL to use and how to build it. Since OpenAPI describes the input and output parameters very precisely, it makes sense not to waste time writing these wrappers by hand, but to let a generator produce them, and to adapt your own code to the data model and control model that interact with this HTTP RPC.
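A minimal sketch of such generated wrappers is shown below. `buildClient`, the injected `send` function, and the `stream_get` and `stream_save` operationIds are hypothetical; a real generator would also handle query parameters, request validation, and response types.

```javascript
// HTTP methods that may appear as keys of an OpenAPI 3.0 path item.
const METHODS = ['get', 'put', 'post', 'delete', 'options', 'head', 'patch', 'trace'];

// Turn every operation with an operationId into a function on the client object.
// `send` is injected so that the transport (fetch, a test stub, ...) stays replaceable.
function buildClient(spec, send) {
  const client = {};
  for (const [template, item] of Object.entries(spec.paths)) {
    for (const method of METHODS) {
      const operation = item[method];
      if (!operation || !operation.operationId) continue;
      client[operation.operationId] = (params = {}, body) => {
        // Fill in {placeholders}; escaping happens in one place for all calls.
        const url = template.replace(/\{(\w+)\}/g, (_, name) => {
          if (!(name in params)) throw new Error(`missing path parameter: ${name}`);
          return encodeURIComponent(params[name]);
        });
        return send({ method: method.toUpperCase(), url, body });
      };
    }
  }
  return client;
}

// Made-up schema fragment.
const clientSpec = {
  paths: {
    '/streams/{name}': {
      get: { operationId: 'stream_get' },
      put: { operationId: 'stream_save' },
    },
  },
};

// A stub transport that records requests instead of sending them.
const sent = [];
const client = buildClient(clientSpec, (request) => {
  sent.push(request);
  return request;
});

client.stream_save({ name: 'sports/live' }, { title: 'Live' });
client.stream_get({ name: 'cam1' });
```

The stream name containing `/` is escaped to `sports%2Flive` by the wrapper, so the bug from the hand-made URL example cannot be reintroduced at each call site.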

What’s Next?

We have about 25 000 lines of schema code, and that is without examples or descriptions. We will build a documentation environment on top of it to share objects and their descriptions between projects. There will also be examples, which will be used to test the mock servers. Stay tuned!

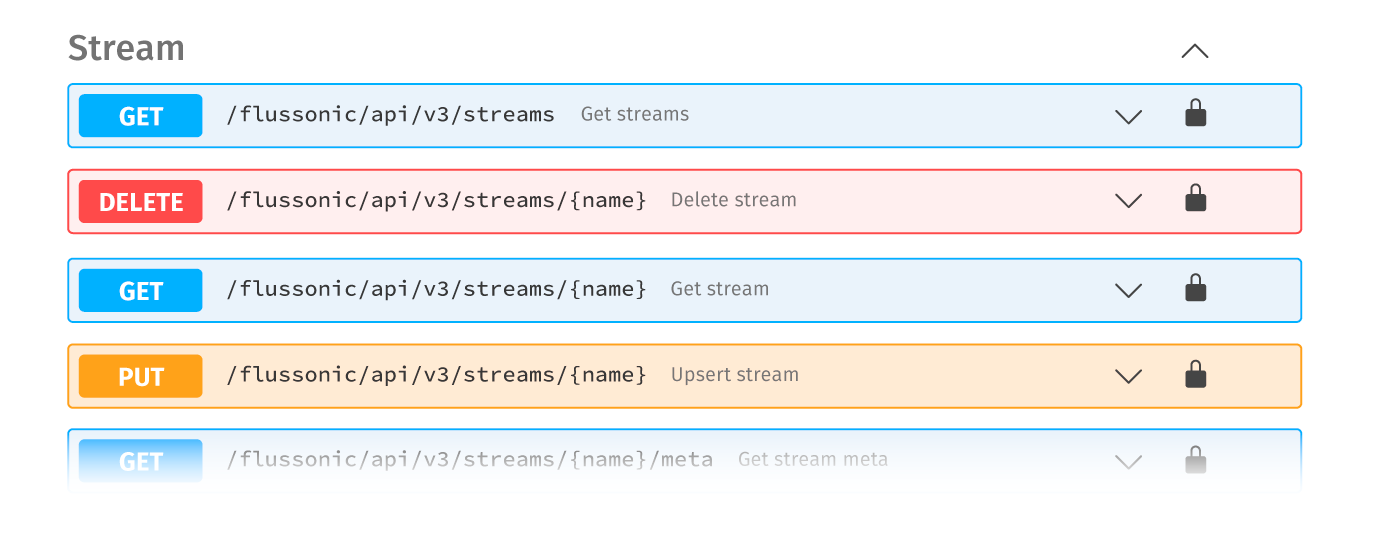

Go to the Flussonic HTTP API