The Evolution of Debian Package Automation

Our Flussonic video streaming server is distributed as a Debian package that customers install on their own servers.

Package creation has come a long way toward full automation, and the process is still going on. Here I want to share how we evolved from creating packages by hand to a fully automated multi-branch package repository assembled from several git repositories.

The very beginning: handmade packages

Years ago, Flussonic lived in a single repository together with its frontend, and the Debian package was created with these commands:

    make deb
    scp *.deb repo@repo.myserver.com:~/
    ssh repo@repo.myserver.com ./update-debian.sh

Such an approach was fine for a start. A build server (especially in the late 2000s) is not easy to maintain. If you configure it properly and then do not touch it for a couple of years, you will have forgotten everything about it by the time it breaks. So it is very convenient to have everything needed to build an installable package right in your sources.

What is inside this make deb? It runs https://github.com/flussonic/epm, an Erlang package manager. The tool was rewritten from the excellent https://github.com/jordansissel/fpm.

We write in Erlang and did not want to install Ruby just to make a package, which was very important in the pre-Docker era. This make deb was launched on Mac OS X. So, as a Mac developer, I could build a package there and immediately install it on Debian.

But what about RPM? People use it too. A big customer of ours forced us to build RPM packages for them, and I became (possibly) the second person on Earth to implement an RPM package writer. It was a very traumatic experience and I hope to forget it one day, because RPM is the most undesigned and brain-damaging format I have dealt with. Why didn't I just compile rpm for Mac OS X? Because that was an even more complicated task than implementing an RPM writer.

Those were the easy times, when it was possible to run all the tests before pushing a commit and they were all green.

So here is what we had several years ago:

- A build server is (was) hard to maintain with a small team when no dedicated engineer is responsible for it

- It is possible to compile Erlang to bytecode on a Mac and run it on Debian

- We had a simple Erlang tool to create the package by hand

- Releases were rare, so uploading by hand was OK

- RPM is possible, but never try to repeat this experience. Use alien

Nightly build automation

Our support team was growing together with the user base and was giving intermediate builds to customers more and more often. They got tired of the monotonous process of building the package and uploading it themselves. At that time we moved to self-hosted GitLab and decided to try its CI mechanism:

    stages:
      - build
      - upload

    build:
      stage: build
      script:
        - make deb

    upload:
      stage: upload
      script:
        - scp *.deb repo@repo.myserver.com:~/master/
        - ssh repo@repo.myserver.com "./update-debian.sh"

The easiest step was to set up package building on a CI runner and uploading to a second repository. Now we had two repositories: one for releases (still built and uploaded manually) and the other for packages built from the master branch.

We hadn’t adopted Docker yet, so the runner machine had to be configured properly for this task. Still, the first step was done: the master branch was now packaged by a robot, producing “nightly builds” several times a day.

Multi-branch packaging

As our development team also grew, some practices changed and git branches were used more and more often. The support team started deploying packages built from branches to customers during integration or support work.

A new problem appeared: we decided to create per-branch repositories so that the flussonic package could be installed from any git branch.
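As a rough illustration of what a per-branch repository can look like from the customer's side, here is a minimal Python sketch. It assumes one folder per branch served as a flat Debian repository; the host name `repo.example.com`, the sanitizing rule and both function names are hypothetical and not taken from our actual setup.

```python
import re

# Hypothetical base URL; the real repository host is not shown in this article.
REPO_BASE_URL = "https://repo.example.com"


def branch_folder(branch: str) -> str:
    """Map a git branch name to a folder name that is safe in paths and URLs."""
    # Replace every run of characters other than letters, digits, '.', '_', '-'.
    safe = re.sub(r"[^A-Za-z0-9._-]+", "-", branch).strip("-.")
    if not safe:
        raise ValueError(f"cannot derive a folder name from {branch!r}")
    return safe


def apt_source_line(branch: str) -> str:
    """Build a sources.list entry for a flat repository (one folder per branch)."""
    return f"deb {REPO_BASE_URL}/{branch_folder(branch)}/ ./"


print(apt_source_line("master"))
print(apt_source_line("feature/rtsp fix"))
```

With a layout like this, switching a customer to a branch build would only mean changing one line in their apt sources.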

Why is it complicated? Because we haven’t discussed Flussonic dependencies yet. We have our own packages for Erlang, Python and a few other things, and all of these packages have to be pushed to every repository that we distribute.

So, when we build a package from a new branch, we somehow need to put all the dependencies into the repository for this new branch.

Here we ran into two problems: packaging the dependencies and uploading them.

Dependencies packaging

Flussonic dependencies change very rarely. It takes us several months to test and migrate to a new version of Erlang. All libraries like Cowboy (web server) are vendored into the Flussonic repository and are considered part of the main package, so here we are talking only about Erlang, Python, Caffe, etc.

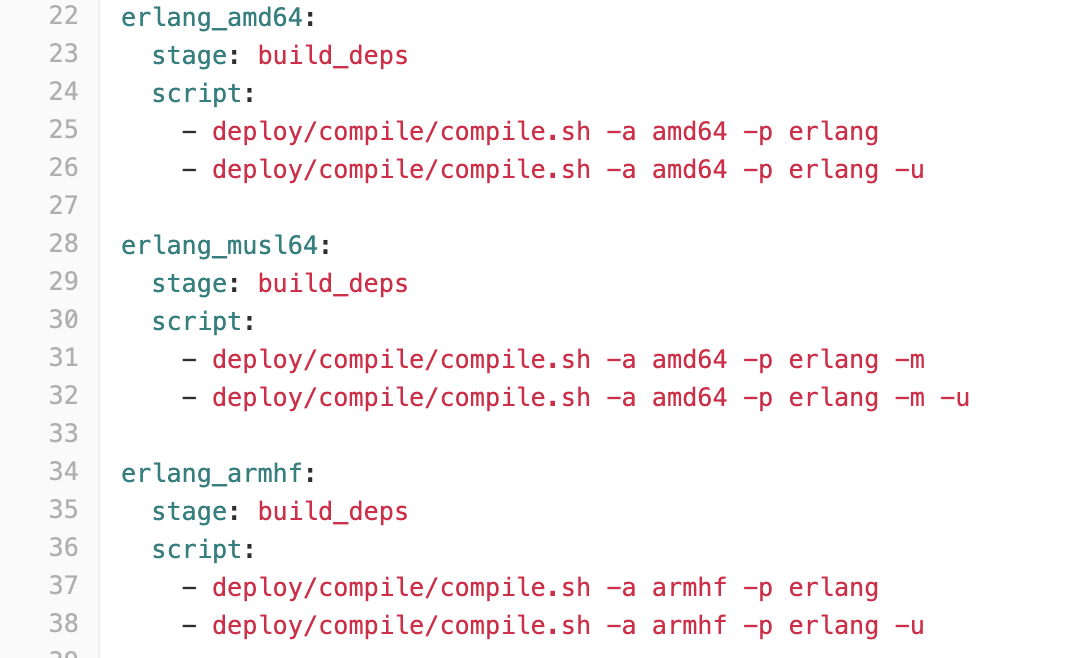

While developing the dependency maintenance process, we moved to Docker and to cross-compilation. Now we have 5 target architectures: amd64, arm64, musl64 (Alpine on amd64), armhf and e2k (Elbrus processor).

All dependencies (about 20 packages in total, times their architectures) are built in the Docker ecosystem. We have a Dockerfile for each of them and use docker build. This is very important, because docker run always runs all commands, while docker build can aggressively cache every line of the Dockerfile.

Always try to use docker build when possible! Pay attention to the command order, because it can invalidate your cache when that is not necessary. For example:

    FROM ubuntu:18.04
    COPY tools tools/
    RUN tools/fetch-prerequisites.sh

This is not good code, because if you change a single line in any file in tools, you will download everything again. Here is a better version:

    FROM ubuntu:18.04
    COPY tools/fetch-prerequisites.sh tools/fetch-prerequisites.sh
    RUN tools/fetch-prerequisites.sh
    COPY tools tools/

This is better, but it would be even better not to write fetch-prerequisites.sh at all:

    FROM ubuntu:18.04
    RUN wget -q -O - https://erlang.org/downloads/erlang-22.2.tgz
    COPY tools tools/

You do not need to reduce the number of Dockerfile steps too much, because fine granularity helps you get a good cache hit ratio during the build.
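To see why the order of steps matters, here is a deliberately simplified Python model of layer caching. It is only a sketch and not Docker's actual algorithm: it assumes that each step's cache key depends on the previous key, on the instruction text and, for COPY, on the content of the copied files. The functions `layer_keys` and `reused_steps` and the file contents are made up for illustration.

```python
import hashlib


def layer_keys(steps, files):
    """Return one cache key per Dockerfile step (simplified model).

    steps: list of (instruction, argument) tuples, e.g. ("RUN", "make").
    files: dict mapping a path in the build context to its content (bytes).
    """
    keys = []
    parent = ""
    for instruction, argument in steps:
        digest = hashlib.sha256()
        digest.update(parent.encode())
        digest.update(f"{instruction} {argument}".encode())
        if instruction == "COPY":
            # A COPY step also depends on the content of every copied file.
            source = argument.split()[0].rstrip("/")
            for path in sorted(files):
                if path == source or path.startswith(source + "/"):
                    digest.update(path.encode())
                    digest.update(files[path])
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def reused_steps(old_keys, new_keys):
    """Count the leading steps whose keys match, i.e. steps served from cache."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count


naive = [("FROM", "ubuntu:18.04"),
         ("COPY", "tools tools/"),
         ("RUN", "tools/fetch-prerequisites.sh")]
better = [("FROM", "ubuntu:18.04"),
          ("COPY", "tools/fetch-prerequisites.sh tools/fetch-prerequisites.sh"),
          ("RUN", "tools/fetch-prerequisites.sh"),
          ("COPY", "tools tools/")]

before = {"tools/fetch-prerequisites.sh": b"wget ...", "tools/build.sh": b"make"}
after = dict(before, **{"tools/build.sh": b"make -j8"})  # edit an unrelated tool

print(reused_steps(layer_keys(naive, before), layer_keys(naive, after)))    # 1: only FROM is reused
print(reused_steps(layer_keys(better, before), layer_keys(better, after)))  # 3: the download step stays cached
```

In this model, editing an unrelated file in tools forces the expensive RUN step to execute again in the first layout, while in the second layout only the final COPY is redone.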

Why is it important? When I use my 32-core AMD Threadripper to rebuild all our dependencies from scratch, it takes around 2 hours. With proper caching it takes about 3 seconds to build them and about 3 seconds to “upload” them. With such optimization we can effectively build the dependencies on each commit.

There is an alternative approach: build them once, upload them to a Docker Registry as a tagged image and take them from this image. This approach is also good, but we do not use it because:

- the source code is our single source of truth, and we want to keep the prerequisites rebuildable

- Docker Registry doesn’t have a good atomic mechanism for “pull or build”. It is not easy to write code like: docker pull || (docker build && docker push)

I mentioned that it takes 3 seconds to upload 1 GB of dependencies. How is that possible? We implemented a tricky conditional upload mechanism.

Uploading done right

The problem is this: you have a file that weighs hundreds of megabytes, and you are 99% sure that this file already exists somewhere nearby on the upload target server. Why? Because you have just made a branch and you are uploading a package to a separate branch folder. Dependency packages change very rarely, so you can be quite sure that the same erlang package already sits nearby in the master/ folder and you can just link it into the new folder.

We put a special upload script on the server. Before uploading, the client sends it a GET request with an X-Sha1 header. If there is no file with that name in the required folder, the script looks for a file with the same SHA1 in nearby folders. The SHA1 of each file is, of course, stored on disk in files like erlang_22.4_amd64.deb.sha1.

If such a file exists nearby, it is linked into the new folder. This takes several milliseconds, so uploading all the dependencies usually takes no more than a few seconds.
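The server-side lookup can be sketched in Python roughly as follows. This is an assumption-laden illustration, not our real script: it assumes one folder per branch directly under the repository root, a `<name>.sha1` sidecar file next to every package, and hard links as the linking mechanism. The function name `link_if_known` is hypothetical.

```python
import os
from pathlib import Path
from typing import Optional


def link_if_known(repo_root: Path, branch: str, name: str, sha1: str) -> Optional[Path]:
    """Return the package path in the branch folder if no upload is needed.

    Returns None when the client has to upload the file body.
    """
    target_dir = repo_root / branch
    target = target_dir / name
    if target.exists():
        # Simplification: a fuller version would also compare the stored SHA1.
        return target

    # Look in sibling folders for a sidecar file that holds the same SHA1.
    for sidecar in sorted(repo_root.glob(f"*/{name}.sha1")):
        donor = sidecar.with_suffix("")  # strip the trailing ".sha1"
        if sidecar.parent == target_dir or not donor.exists():
            continue
        if sidecar.read_text().strip() == sha1:
            target_dir.mkdir(parents=True, exist_ok=True)
            os.link(donor, target)  # hard link: no data is copied
            (target_dir / sidecar.name).write_text(sha1 + "\n")
            return target
    return None
```

A hard link costs one directory entry, which is why a hit is answered in milliseconds regardless of the package size.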

An important point: it is good to upload dependencies directly from the Docker image that was built in the previous step.

Our upload tool also sends X-Md5 and X-Sha256 headers: everything that is required for creating the index of a Debian repository. This matters because when the server script rebuilds the repository, it does not recalculate the checksums of the content but just takes them from the cached files.
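On the client side, computing these headers could look like the Python sketch below. The header names come from the text above; the function names and the way the index fields are rendered are assumptions for illustration. The field names Filename, Size, MD5sum, SHA1 and SHA256 are the checksum-related fields of a Debian Packages index entry.

```python
import hashlib
from pathlib import Path


def checksum_headers(path: Path) -> dict:
    """Compute all three digests in a single pass over the file."""
    digests = {
        "X-Md5": hashlib.md5(),
        "X-Sha1": hashlib.sha1(),
        "X-Sha256": hashlib.sha256(),
    }
    with path.open("rb") as stream:
        # Read in 1 MiB chunks so that large packages do not fill the memory.
        for chunk in iter(lambda: stream.read(1 << 20), b""):
            for digest in digests.values():
                digest.update(chunk)
    return {header: digest.hexdigest() for header, digest in digests.items()}


def index_checksum_fields(filename: str, size: int, headers: dict) -> str:
    """Render the checksum-related fields of a Packages index entry."""
    return "\n".join([
        f"Filename: {filename}",
        f"Size: {size}",
        f"MD5sum: {headers['X-Md5']}",
        f"SHA1: {headers['X-Sha1']}",
        f"SHA256: {headers['X-Sha256']}",
    ])
```

Once the server has stored these values next to the package, rebuilding the index only needs to read small text files instead of hashing gigabytes of packages.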

Package validation

Usually tests are run against the source tree. This means that something can still break while the package is being built, so the package itself also has to be tested.

We added this to the GitLab CI pipeline. Right after building and uploading all the dependencies and building the flussonic package, we launch a Docker image and install our new package in it. Then we run a set of quick acceptance tests, which are rather trivial: we configure Flussonic with a real license, give it a real config file with a real file source and run checks via HTTP.
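The HTTP part of such a check can be as small as the Python sketch below: poll the freshly installed server until it answers with 200 or a timeout expires. The function names, the timeout values and the injectable `fetch` argument are assumptions made for this example; the real URLs we check are not shown here.

```python
import time
import urllib.error
import urllib.request


def http_status(url: str) -> int:
    """Return the HTTP status code for url, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # the server answered, but not with a success code
    except OSError:
        return 0  # connection refused, timeout, DNS failure, ...


def wait_until_ok(url: str, fetch=http_status, timeout: float = 30.0,
                  interval: float = 0.5) -> bool:
    """Poll url until it returns 200; give up after timeout seconds."""
    deadline = time.monotonic() + timeout
    while fetch(url) != 200:
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
    return True
```

Polling matters because the server needs some time to start after the package is installed; a single request made too early would fail a perfectly good package.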

So we can be confident that nothing was broken during package creation.

Multiproject packaging

As our team continued growing, we extracted the Flussonic Watcher project out of the Flussonic git repository. Flussonic Watcher is our product for video surveillance. It is a Python backend plus a React frontend. They now live in two different repositories that are periodically merged into a single package, flussonic-watcher_vsn_amd64.deb.

They also have branches and their own versioning. How can we combine two projects in a single package repository? And why is it important? Because we have one support team and we give a single repository to our customers.

How can we make sure that when a Watcher team member creates their own branch, the corresponding folder on the repository server also contains Flussonic and the Flussonic dependencies?

The trick is very simple: when a new branch is created and some package is uploaded to the repository, our server script links all the latest packages from the master/ folder into this new branch folder. So flussonic always has flussonic-watcher next to it, and flussonic-watcher always has flussonic in the branch folder.
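A minimal Python sketch of this linking step is shown below. It assumes that master/ holds only the latest build of each package, that file names follow the usual name_version_arch.deb pattern and that hard links are used; the function names are hypothetical.

```python
import os
from pathlib import Path


def package_key(deb: Path) -> tuple:
    """Return (name, architecture) parsed from a name_version_arch.deb file name."""
    parts = deb.stem.split("_")
    return parts[0], parts[-1]


def seed_branch_from_master(repo_root: Path, branch: str) -> list:
    """Hard-link master packages that the branch folder does not provide itself."""
    master_dir = repo_root / "master"
    branch_dir = repo_root / branch
    branch_dir.mkdir(parents=True, exist_ok=True)
    present = {package_key(deb) for deb in branch_dir.glob("*.deb")}
    linked = []
    for deb in sorted(master_dir.glob("*.deb")):
        if package_key(deb) in present:
            continue  # the branch ships its own build of this package
        os.link(deb, branch_dir / deb.name)
        linked.append(deb.name)
    return linked
```

Packages built from the branch itself win over the ones from master, so a Watcher branch gets its own flussonic-watcher plus flussonic and the dependencies from master.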

TL;DR

- we started by building and uploading Debian packages by hand

- then we moved to automated building and uploading

- migrated to building packages inside Docker images (not containers)

- then moved the Flussonic dependencies to Docker images

- implemented a virtual (caching) upload mechanism

- created a repository handling script that maintains per-branch repositories

- combined several projects on a single repository server

- install and validate the package before uploading

What next?

Right now we need to solve the problem of deleting branch folders. When GitLab deletes a branch (when a merge request is accepted), no hook is called. We cannot delete the folder at that moment, so we need to run a cron script that deletes empty folders.
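One possible shape for such a cleanup job, sketched in Python: compare the folders on the repository server with the list of branches that still exist and remove the rest. This is a variation on the idea and not our actual script. It assumes that folder names equal branch names and that the caller obtains the list of live branches somehow, for example by parsing the output of `git ls-remote --heads`; the function names are hypothetical.

```python
import shutil
from pathlib import Path


def stale_branch_folders(repo_root: Path, live_branches, keep=("master",)) -> list:
    """List folders whose branch no longer exists in git."""
    live = set(live_branches) | set(keep)
    return sorted(
        folder for folder in repo_root.iterdir()
        if folder.is_dir() and folder.name not in live
    )


def prune(repo_root: Path, live_branches, dry_run: bool = True) -> list:
    """Delete stale folders; with dry_run=True only report them."""
    stale = stale_branch_folders(repo_root, live_branches)
    if not dry_run:
        for folder in stale:
            shutil.rmtree(folder)
    return [folder.name for folder in stale]
```

The dry-run default is deliberate: a job that deletes customer-facing packages should print what it would remove before it is trusted to run unattended.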

I do not like cron scripts because someone has to remember that they exist. It would be good to integrate GitLab with the Debian repository, but we have no solution here yet.