TWCC — Server-Side Implementation of Adaptive WebRTC Protocol for Large Scale Simulcast Projects

Real-time communication protocols, and WebRTC in particular, are widely used in video streaming projects that require ultra-low latency. Examples include video calls, telemedicine, distance education, game streaming, video blogging and many more. WebRTC is a family of protocols developed for real-time transmission of encrypted video between two peers. It runs over the UDP and TCP transport protocols and uses RTP/RTCP to packetize media data. WebRTC has built-in transport control mechanisms such as RR/SR, NACK, PLI, FIR, FEC and REMB, and it also allows adding extensions to fine-tune data transmission and collect information.

This article describes how Flussonic Media Server uses the above-mentioned mechanisms and extensions to deliver low-latency video with adaptive bitrate.


The problem we tried to solve, and how our clients could benefit from our solution

When video streams are transmitted, network conditions can change over time, and network throughput may increase or decrease. WebRTC has built-in peer-to-peer mechanisms through which the receiver can signal to the transmitter that the video is not getting through and the bitrate needs to be reduced. Conversely, the receiver can signal to the source that the channel bandwidth has improved and the transmitter may encode the video at a higher bitrate.

In simulcast projects, where transmission goes from one to many, it is impossible to encode video individually for each viewer. Instead, the system has to switch between video renditions of predefined quality that were configured on the transcoder before the transmission started. We have developed a solution that uses these peer-to-peer mechanisms for client-server interaction and adaptively changes the bitrate of the video stream.

With our solution, an unlimited number of viewers can access video streams while connected to different networks with different channel characteristics that may change at any moment. Playback starts without delay and without buffering, and the video is delivered with ultra-low latency.

What tools the protocol gives developers

There are many algorithms for estimating network load and the currently available bandwidth. They measure channel latency and the number of dropped packets, and use these values to calculate the total possible throughput between two nodes.

Our first approach

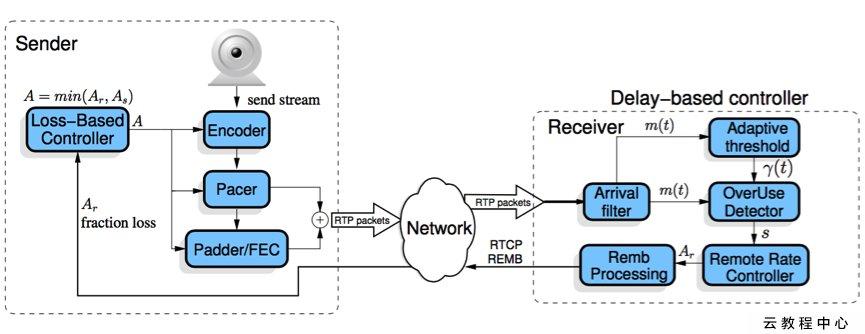

Our initial approach to measuring the available bandwidth was to use the GCC (Google Congestion Control) mechanism built into the browser. The browser on the receiving side collects statistics on incoming RTP packets, including the number of lost packets. The receiver then estimates the delays between consecutive packets and calculates the REMB value (Receiver Estimated Maximum Bitrate).

The receiver then sends RTCP feedback to the transmitter: RR (Receiver Report) packets with the packet loss statistics and a REMB message with the estimated bitrate. The transmitter receives this RTCP data and adjusts the bitrate of the video stream for this client.
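For reference, REMB is carried in an RTCP payload-specific feedback message (PT=206, FMT=15) described in the IETF draft draft-alvestrand-rmcat-remb, with the bitrate stored as an 18-bit mantissa and a 6-bit exponent. The Python sketch below encodes and decodes such a message to show what the transmitter actually reads. It handles a single, uncompounded packet, does not validate the length field, and is an illustration rather than Flussonic code.

```python
import struct

REMB_PT = 206  # RTCP payload-specific feedback (PSFB)
REMB_FMT = 15  # application layer feedback (ALFB)


def encode_remb(sender_ssrc: int, bitrate_bps: int, media_ssrcs: list[int]) -> bytes:
    """Build a REMB message; the bitrate is stored as mantissa * 2**exp."""
    exp = 0
    while (bitrate_bps >> exp) >= (1 << 18):  # the mantissa field is 18 bits wide
        exp += 1
    mantissa = bitrate_bps >> exp  # low-order bits are truncated
    length_words = 4 + len(media_ssrcs)  # packet length in 32-bit words, minus one
    header = struct.pack("!BBH", 0x80 | REMB_FMT, REMB_PT, length_words)  # V=2, P=0
    body = struct.pack("!II4s", sender_ssrc, 0, b"REMB")  # media source SSRC is 0
    body += struct.pack("!I", (len(media_ssrcs) << 24) | (exp << 18) | mantissa)
    body += b"".join(struct.pack("!I", ssrc) for ssrc in media_ssrcs)
    return header + body


def parse_remb(packet: bytes) -> tuple[int, list[int]]:
    """Return (bitrate in bps, list of SSRCs the estimate applies to)."""
    first, pt, _length = struct.unpack_from("!BBH", packet, 0)
    if first >> 6 != 2 or first & 0x1F != REMB_FMT or pt != REMB_PT:
        raise ValueError("not an RTCP PSFB/ALFB packet")
    if packet[12:16] != b"REMB":
        raise ValueError("ALFB packet is not REMB")
    (word,) = struct.unpack_from("!I", packet, 16)
    num_ssrc = word >> 24
    exp = (word >> 18) & 0x3F
    mantissa = word & 0x3FFFF
    ssrcs = list(struct.unpack_from(f"!{num_ssrc}I", packet, 20))
    return mantissa << exp, ssrcs
```

Because of the mantissa/exponent encoding, large values are rounded down slightly: `encode_remb(1, 1_000_001, [5])` decodes back to 1,000,000 bps.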

Current implementation

Our current approach for one-to-many WebRTC ABR transmissions uses an RTP extension called TWCC (Transport Wide Congestion Control).

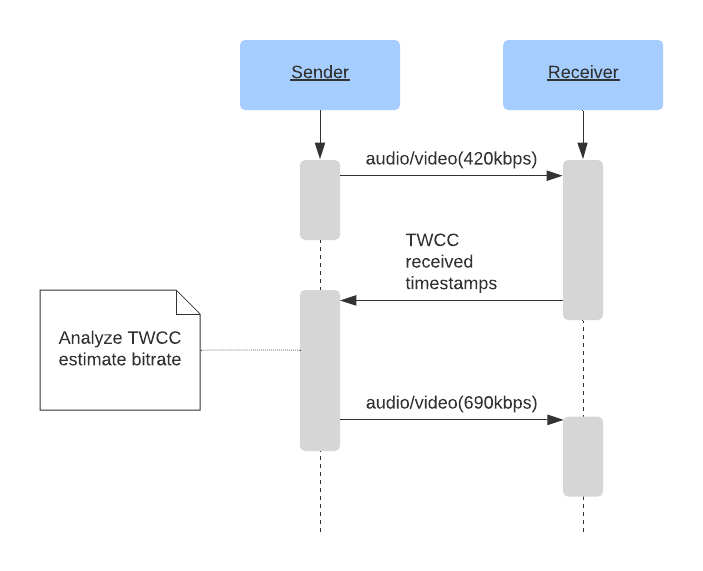

Here, the receiving side reports the arrival time of each packet back to the sender in a special RTCP message. The transmitting side uses the GCC algorithm, or an alternative, to measure the delays between packet arrivals and to identify late or missing packets.

When this auxiliary data is exchanged frequently enough, the transmitting side can quickly adapt to changes in network conditions and adjust the characteristics of the video stream.
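As a rough illustration of what the transmitting side can derive from TWCC feedback, the sketch below takes per-packet send and arrival times, lists missing packets, and fits a trend line to the accumulated delay variation, similar in spirit to the delay-based detector in GCC. It is deliberately simplified: real GCC groups packets into short bursts and uses an adaptive threshold, whereas the `FeedbackEntry` type and the fixed `threshold` here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackEntry:
    seq: int                      # transport-wide sequence number
    send_ms: float                # when the sender put the packet on the wire
    arrival_ms: Optional[float]   # reported by the receiver; None if not received


def lost_packets(entries: list[FeedbackEntry]) -> list[int]:
    return [e.seq for e in entries if e.arrival_ms is None]


def delay_slope(entries: list[FeedbackEntry]) -> float:
    """Least-squares slope of the accumulated delay variation (ms per ms)."""
    received = [e for e in entries if e.arrival_ms is not None]
    xs, ys, accumulated = [], [], 0.0
    for prev, cur in zip(received, received[1:]):
        # Positive variation: this packet spent longer in transit than the previous one.
        accumulated += (cur.arrival_ms - prev.arrival_ms) - (cur.send_ms - prev.send_ms)
        xs.append(cur.arrival_ms)
        ys.append(accumulated)
    if len(xs) < 2:
        return 0.0
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den if den else 0.0


def classify(slope: float, threshold: float = 0.01) -> str:
    # Hypothetical fixed threshold; GCC adapts its threshold over time.
    if slope > threshold:
        return "overuse"   # queues along the path are growing
    if slope < -threshold:
        return "underuse"  # queues are draining
    return "normal"
```

A growing slope means packets are piling up in some queue on the path, which usually shows up before packets start getting lost.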

We believe that a TWCC-based algorithm is the best choice because it gives the transmitting side a large set of data for estimating the quality of the Internet channel:

- almost instant information about lost packets, including individual packets;

- precise egress bitrate;

- precise ingress bitrate;

- jitter value;

- the delay between sending and receiving of packets;

- a picture of how the network behaves when the available bandwidth is stable or fluctuates in waves.
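The sketch below shows, under simplifying assumptions, how several of these values could be computed from one batch of TWCC feedback: the loss ratio, egress and ingress bitrate, and an interarrival jitter estimate using the RFC 3550 formula (applied here to sender timestamps rather than RTP timestamps). The `Report` type and the `summarize` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Report:
    size_bytes: int
    send_ms: float
    arrival_ms: Optional[float]  # None if the receiver reported the packet as missing


def _kbps(bits: float, span_ms: float) -> float:
    return bits / span_ms if span_ms > 0 else 0.0  # bits per ms == kbit/s


def summarize(reports: list[Report]) -> dict[str, float]:
    if not reports:
        return {"loss": 0.0, "egress_kbps": 0.0, "ingress_kbps": 0.0, "jitter_ms": 0.0}
    sent = sorted(reports, key=lambda r: r.send_ms)
    received = [r for r in sent if r.arrival_ms is not None]
    by_arrival = sorted(received, key=lambda r: r.arrival_ms)

    # Egress: bytes handed to the network between the first and the last send time.
    egress = _kbps(8 * sum(r.size_bytes for r in sent[:-1]),
                   sent[-1].send_ms - sent[0].send_ms)
    # Ingress: bytes that arrived between the first and the last arrival time.
    ingress = 0.0
    if len(by_arrival) > 1:
        ingress = _kbps(8 * sum(r.size_bytes for r in by_arrival[1:]),
                        by_arrival[-1].arrival_ms - by_arrival[0].arrival_ms)

    # RFC 3550-style interarrival jitter: J += (|D| - J) / 16.
    jitter = 0.0
    for prev, cur in zip(received, received[1:]):
        d = (cur.arrival_ms - prev.arrival_ms) - (cur.send_ms - prev.send_ms)
        jitter += (abs(d) - jitter) / 16

    return {
        "loss": 1 - len(received) / len(reports),
        "egress_kbps": egress,
        "ingress_kbps": ingress,
        "jitter_ms": jitter,
    }
```

When ingress stays below egress for several batches, the difference is data that is queuing up or being dropped somewhere on the path.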

In our initial approach, this data was not available to the server; it was hidden inside the browser's implementation of the GCC algorithm. The advantage of our current approach is that the control logic and video stream management are on the transmitting side. This streamlines development and lets us implement new rate control algorithms on the server side without having to update anything on the client.

How our implementation differs from the standard “browser-to-browser” scheme

A browser implementation can use different variants of the GCC algorithm to control the encoder, ensuring that the video bitrate matches the available network bandwidth at every moment. In peer-to-peer video sessions, browsers can change their encoder bitrates gradually, in very small steps, for example 50 Kbps per iteration.

When ABR video is delivered from a media server, there is only a fixed set of profiles (resolutions and bitrates). The media server's encoder generates all predefined profiles at the same time. At any moment, the system can switch a viewer whose network conditions have changed for better or worse between these fixed bitrates (video tracks). The difference in bitrate between adjacent tracks can be relatively large, so before switching up, the system must make sure that the next track's bitrate will fit into the currently available bandwidth.
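To illustrate how this differs from a browser's small encoder steps, here is a hypothetical sketch of choosing among fixed renditions, using the four video bitrates of the test file from the benchmarking section of this article. The step-down rule and the one-track-at-a-time step-up are assumptions; the 10% headroom figure comes from the switching rule the article describes for Flussonic's probing.

```python
TRACKS_KBPS = (198, 499, 1196, 2996)  # video renditions of the test file, low to high


def select_track(current_kbps: int, estimate_kbps: float, headroom: float = 1.10) -> int:
    """Return the track bitrate to play next (hypothetical switching policy)."""
    idx = TRACKS_KBPS.index(current_kbps)
    start = idx
    # Step down, possibly several tracks at once, while the current track does not fit.
    while idx > 0 and TRACKS_KBPS[idx] > estimate_kbps:
        idx -= 1
    # Step up at most one track, and only with headroom above the next track's bitrate.
    if idx == start and idx + 1 < len(TRACKS_KBPS):
        if estimate_kbps > TRACKS_KBPS[idx + 1] * headroom:
            idx += 1
    return TRACKS_KBPS[idx]
```

For a viewer on the 499 kb/s track, an estimate of 1300 kb/s is not enough to move up, because 1.1 × 1196 = 1315.6 kb/s. The next step is a jump of almost 700 kb/s, far coarser than a browser's 50 Kbps increments.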

When we used REMB-based bandwidth estimation, we ran into a problem: browsers raise their bitrate estimate very slowly. This was especially obvious in videos without large keyframes, for example streams from CCTV cameras with no dynamic scenes or sudden background changes.

Due to the specifics of video encoding, the actual bitrate does not always match the encoder's bitrate settings; it depends on the frame being encoded and on the encoder parameters. For example, the transmitter might be sending only 100 Kbps of data while the estimated bitrate is 400 Kbps. As a result, the estimated bitrate on the receiving side decreases. GCC and REMB may need up to 10 minutes to raise the estimate again, and in some cases they never reach the actual value.

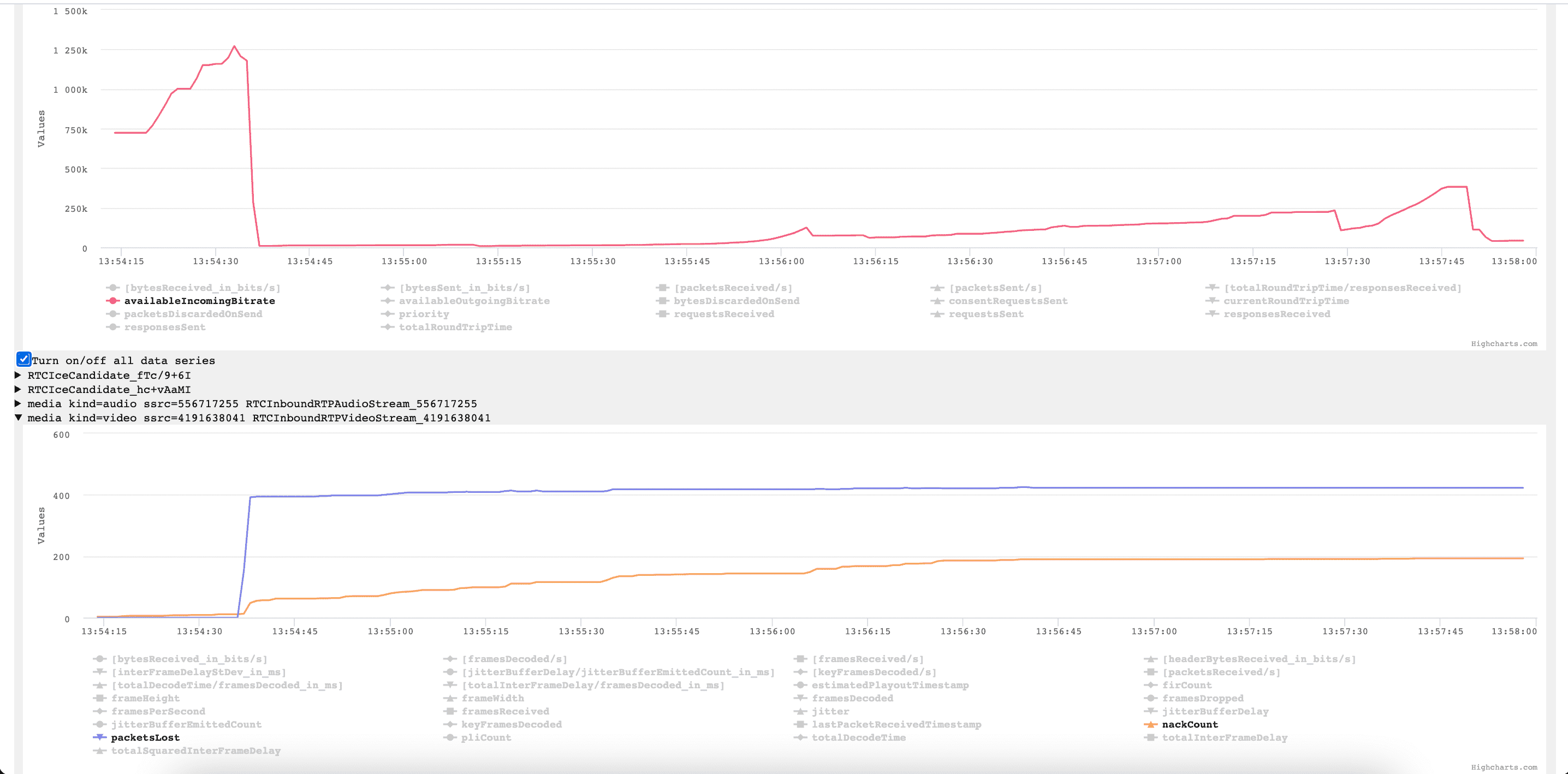

This kind of problem often occurs after a momentary connectivity loss. The sudden increase in the number of lost packets makes the system quickly switch to a lower-bitrate video track. The actual channel bandwidth then returns to its initial value, yet the REMB estimate does not allow the system to get back to the higher-quality track. This situation is shown in the diagram above: the system experienced a short connectivity loss that increased the packetLoss and nackCount counters. The connection recovered quickly, but the REMB value grows too slowly.

How it works

There are a number of solutions that address this problem, for example Chromium and jitsi-videobridge. They are built on the same basic principle:

- Using TWCC, the transmitter periodically sends the receiving side a set of packets to test the estimated bitrate;

- The transmitter receives information about the delivery times of those packets;

- It calculates the actual bitrate at which these packets were sent and received;

- The transmitter switches to the video track with a higher bitrate if the measured bandwidth is high enough.
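The third step, measuring the rate at which a probe set was sent and received, can be sketched as follows. This is one common way to do it, not necessarily what Chromium, jitsi-videobridge or Flussonic do: compute the send rate and the receive rate over the probe set and take the smaller one as the estimate. `ProbePacket` is a hypothetical type holding data the transmitter would get from TWCC feedback.

```python
from dataclasses import dataclass


@dataclass
class ProbePacket:
    size_bytes: int
    send_ms: float
    arrival_ms: float


def probe_rates_kbps(cluster: list[ProbePacket]) -> tuple[float, float, float]:
    """Return (send rate, receive rate, estimate) for one probe set, in kbit/s."""
    if len(cluster) < 2:
        raise ValueError("need at least two received probe packets")
    by_send = sorted(cluster, key=lambda p: p.send_ms)
    by_arrival = sorted(cluster, key=lambda p: p.arrival_ms)
    send_span = by_send[-1].send_ms - by_send[0].send_ms
    recv_span = by_arrival[-1].arrival_ms - by_arrival[0].arrival_ms
    if send_span <= 0 or recv_span <= 0:
        raise ValueError("probe packets must be spread out in time")
    # The last packet leaves when the send interval closes and the first one has
    # already arrived when the receive interval opens, so each is left out.
    send_bits = 8 * sum(p.size_bytes for p in by_send[:-1])
    recv_bits = 8 * sum(p.size_bytes for p in by_arrival[1:])
    send_rate = send_bits / send_span  # bits per ms == kbit/s
    recv_rate = recv_bits / recv_span
    # A receive rate below the send rate points to a bottleneck on the path.
    return send_rate, recv_rate, min(send_rate, recv_rate)
```

For example, ten 1000-byte packets sent every 4 ms (2000 kb/s) but arriving every 5 ms give a receive rate, and therefore an estimate, of 1600 kb/s.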

ABR WebRTC solutions differ in how they answer the following questions:

- When do you need to test the channel bandwidth?

- How do you select the bitrate for testing?

- What do you use as test packets?

- How do you analyze and apply the measured bitrate value?

From time to time, Flussonic Media Server needs to test the available bandwidth against a target bitrate to decide whether it can switch up to the next video track. The system uses payload packets from the outgoing queue as test packets, selected so that their total size corresponds to the target bitrate.
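A minimal sketch of picking test packets from a viewer's outgoing queue and pacing them, assuming a probe window of fixed length and packets sent in small bursts (a few at a time, as the article describes). `pick_probe_packets`, `pacing_offsets_ms`, `window_ms` and `burst` are hypothetical names and parameters, not Flussonic settings.

```python
from collections import deque


def pick_probe_packets(queue: deque, target_kbps: float, window_ms: float) -> list[bytes]:
    """Take payload packets from the head of the queue until they add up to the
    number of bits the target bitrate would carry during the probe window."""
    budget_bits = target_kbps * window_ms  # kbit/s * ms == bits
    picked, bits = [], 0
    while queue and bits < budget_bits:
        packet = queue.popleft()
        picked.append(packet)
        bits += 8 * len(packet)
    return picked  # may fall short of the budget if the queue runs dry


def pacing_offsets_ms(packets: list[bytes], target_kbps: float, burst: int = 2) -> list[float]:
    """Send time of each packet relative to the first: packets leave in groups of
    `burst`, and each group waits until the average rate is back at the target."""
    offsets, sent_bits, group_start = [], 0.0, 0.0
    for i, packet in enumerate(packets):
        if i % burst == 0:
            group_start = sent_bits / target_kbps  # bits / (bits per ms) == ms
        offsets.append(group_start)
        sent_bits += 8 * len(packet)
    return offsets
```

With 1250-byte packets, a 1000 kb/s target and a 40 ms window, four packets are picked and sent at offsets 0, 0, 20 and 20 ms. If the queue holds less data than the budget, `pick_probe_packets` simply returns what is there.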

The test packets are sent a few at a time, so that the whole test set is transmitted at the target bitrate. Once we receive the arrival times of the test packets, we calculate the instant bitrate as well as an exponential moving average based on previous measurements. Then we increase the target bitrate and repeat. As soon as the target bitrate exceeds the bitrate of the next higher-quality video track by 10%, the system switches to that track.
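The probe loop described above might look roughly like the following sketch. The moving-average weight `alpha`, the 15% `step`, and the choice to raise the target only when the averaged measurement reaches it are assumptions made for illustration; only the 10% headroom rule comes from the text. The instant bitrate passed to the hypothetical `on_probe` method would be computed from the arrival times of the test packets.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeController:
    tracks_kbps: list[int]          # available video tracks
    current_kbps: int               # track the viewer is playing now
    target_kbps: float              # bitrate the next probe will test
    alpha: float = 0.3              # weight of the newest sample in the moving average (assumed)
    step: float = 1.15              # target growth after a successful probe (assumed)
    headroom: float = 1.10          # switch once the target exceeds the next track by 10%
    ema_kbps: Optional[float] = None

    def next_track(self) -> Optional[int]:
        higher = sorted(t for t in self.tracks_kbps if t > self.current_kbps)
        return higher[0] if higher else None

    def on_probe(self, measured_kbps: float) -> int:
        """Feed one instant bitrate measurement and return the track to play."""
        if self.ema_kbps is None:
            self.ema_kbps = measured_kbps
        else:
            self.ema_kbps = self.alpha * measured_kbps + (1 - self.alpha) * self.ema_kbps
        if self.ema_kbps >= self.target_kbps:
            # The channel sustained the target, so treat it as confirmed.
            nxt = self.next_track()
            if nxt is not None and self.target_kbps > nxt * self.headroom:
                self.current_kbps = nxt
            self.target_kbps *= self.step  # probe a bit higher next time
        return self.current_kbps
```

Fed a steady 2000 kb/s measurement, a viewer on the 499 kb/s track starting from a 600 kb/s target moves to the 1196 kb/s track on the seventh probe and stays there, because the target stops growing once it exceeds the averaged measurement.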

Using payload packets as test packets has its pros and cons.

Pros:

- We don't generate excess traffic: only the original payload packets are sent.

Cons:

- There may be situations where the total size of the packets of a low-bitrate video stream is not enough to test against a high target bitrate.

- The packets reach the receiving side at an uneven rate, which might affect the jitter value.
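A rough, back-of-the-envelope illustration of the first drawback, assuming probes draw only on the current track's packets and a hypothetical probe window T: to confirm a target bitrate R_target, the server needs B_probe = R_target × T bits of queued payload, while the current track produces only about B_track = R_track × T bits in the same time. Taking the 499 kb/s and 1196 kb/s renditions of the test file used in the benchmarks, together with the 10% switching headroom:

```latex
\frac{B_{\text{probe}}}{B_{\text{track}}}
  \approx \frac{R_{\text{target}}\,T}{R_{\text{track}}\,T}
  = \frac{1.1 \times 1196\ \text{kb/s}}{499\ \text{kb/s}}
  = \frac{1315.6}{499}
  \approx 2.64
```

So, under these assumptions, a viewer on the 640x480 track would need roughly 2.6 times more queued data than that track normally produces in the probe window.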

We have planned the following steps to make our solution even better:

- Using existing technologies, we can use packets from the RTX list and/or "empty" padding packets as additional test payload. For this, we will need to packetize video frames into RTP packets and perform SRTP encryption right before sending them to the receiving side. This will let us add extra packets without breaking the RTP sequence.

- It would be better to send test packets only when the bandwidth is underutilized. For that, we could use a so-called ALR (application-limited region) detector, which measures the amount of sent data and signals when the bandwidth is not fully used.

- Before playback starts, we could measure the available bandwidth and select the best-fitting video track.
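The first item relies on assigning RTP sequence numbers at send time. Below is a minimal sketch, assuming plain RTP headers without extensions or CSRCs: the hypothetical `RtpSequencer` numbers media and padding-only packets from one counter, so a padding packet inserted for probing leaves no gap. Padding follows RFC 3550: the P bit is set and the last padding octet holds the padding length. SRTP encryption, which would be applied to each packet right after this step, is left out.

```python
import itertools
import struct


class RtpSequencer:
    """Assigns RTP sequence numbers at send time (hypothetical helper)."""

    def __init__(self, ssrc: int, payload_type: int, first_seq: int = 0):
        self.ssrc = ssrc
        self.payload_type = payload_type
        self._seq = itertools.count(first_seq)

    def _header(self, timestamp: int, padding: bool, marker: bool = False) -> bytes:
        seq = next(self._seq) & 0xFFFF  # the 16-bit sequence number wraps around
        byte0 = 0x80 | (0x20 if padding else 0)  # V=2, P bit, no extension, CC=0
        byte1 = (0x80 if marker else 0) | (self.payload_type & 0x7F)
        return struct.pack("!BBHII", byte0, byte1, seq, timestamp & 0xFFFFFFFF, self.ssrc)

    def media(self, payload: bytes, timestamp: int, marker: bool = False) -> bytes:
        return self._header(timestamp, padding=False, marker=marker) + payload

    def padding_only(self, timestamp: int, size: int) -> bytes:
        # RFC 3550: the last padding octet counts the padding octets, itself included.
        if not 1 <= size <= 255:
            raise ValueError("padding size must be 1..255 bytes")
        return self._header(timestamp, padding=True) + bytes(size - 1) + bytes([size])
```

Because numbering happens only when a packet is built, a probe can slip a padding packet between two media packets and the receiver still sees a continuous sequence, including across the 65535 to 0 wrap.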

How we tested and benchmarked our solution

For tests we have used a video file with the following parameters:

```text
$ ffprobe ./camera_mbr.mp4
Input #0, mov,mp4,m4a,3gp,3g2,mj2, from './camera_mbr.mp4':
  Duration: 00:04:59.96, start: 0.000000, bitrate: 4992 kb/s
    Stream #0:0(eng): Audio: aac (LC) (mp4a), 48000 Hz, stereo, fltp, 96 kb/s
    Stream #0:1(eng): Audio: aac (mp4a), 48000 Hz, stereo, fltp
    Stream #0:2(eng): Video: h264 (avc1), 1280x720 [SAR 1:1 DAR 16:9], 2996 kb/s, 25 fps, 25 tbr, 90k tbn, 50 tbc
    Stream #0:3(eng): Video: h264 (avc1), 1024x576 [SAR 1:1 DAR 16:9], 1196 kb/s, 25 fps, 25 tbr, 90k tbn, 50 tbc
    Stream #0:4(eng): Video: h264 (avc1), 640x480 [SAR 1:1 DAR 4:3], 499 kb/s, 25 fps, 25 tbr, 90k tbn, 50 tbc
    Stream #0:5(eng): Video: h264 (avc1), 320x240 [SAR 1:1 DAR 4:3], 198 kb/s, 25 fps, 25 tbr, 90k tbn, 50 tbc
```

We used various traffic shapers, for example the 'tc' shaper for Linux.
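The article does not list the exact shaper settings. As a hypothetical example of this kind of setup, the helper below builds Linux tc commands that cap a link with a token bucket filter (tbf) or drop all packets with netem to simulate a momentary connectivity loss. The interface name, burst and latency values are assumptions. By default the helper only prints the commands; applying them requires root privileges.

```python
import shlex
import subprocess


def tbf_cmd(dev: str, rate_kbit: int, burst_kbit: int = 32, latency_ms: int = 400) -> list[str]:
    # Token bucket filter: caps the egress rate of `dev`.
    return ["tc", "qdisc", "replace", "dev", dev, "root", "tbf",
            "rate", f"{rate_kbit}kbit", "burst", f"{burst_kbit}kbit", "latency", f"{latency_ms}ms"]


def loss_cmd(dev: str, loss_percent: int) -> list[str]:
    # netem: drops the given share of outgoing packets (100 simulates a short outage).
    return ["tc", "qdisc", "replace", "dev", dev, "root", "netem", "loss", f"{loss_percent}%"]


def clear_cmd(dev: str) -> list[str]:
    # Removes the root qdisc, returning the interface to its default behavior.
    return ["tc", "qdisc", "del", "dev", dev, "root"]


def apply(cmd: list[str], dry_run: bool = True) -> str:
    line = shlex.join(cmd)
    if dry_run:
        print(line)
    else:
        subprocess.run(cmd, check=True)
    return line
```

A test run could call `apply(loss_cmd("eth0", 100), dry_run=False)`, wait a couple of seconds, and restore the link with `apply(clear_cmd("eth0"), dry_run=False)` to reproduce the kind of momentary loss described earlier.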

Test cases

We used browser metrics from chrome://webrtc-internals as well as Flussonic event logs. All tests were conducted on a local network using Flussonic Media Server v22.07.1.

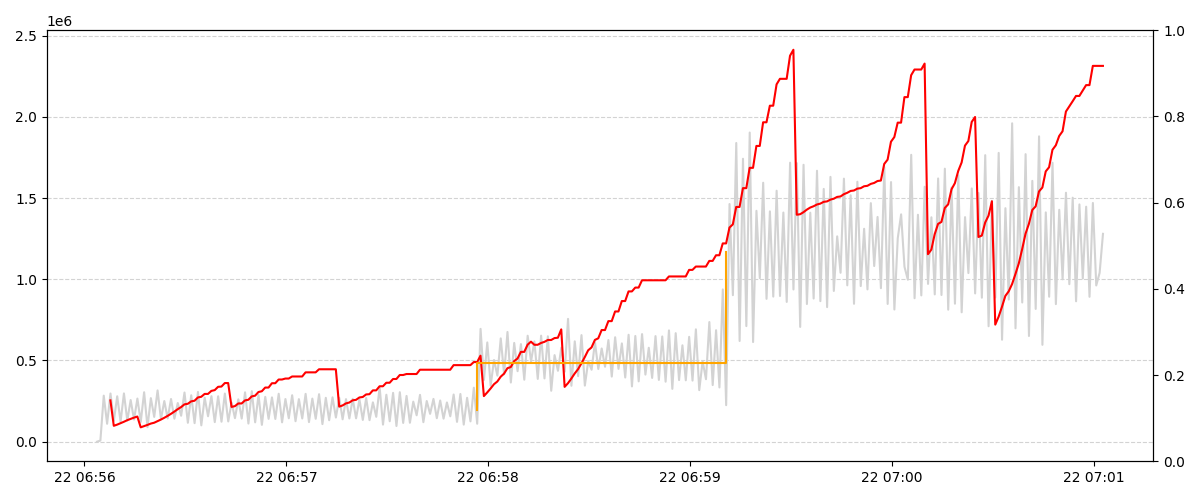

Test #1. Start with the lowest-bitrate video track and measure the time it takes to reach the highest-bitrate track.

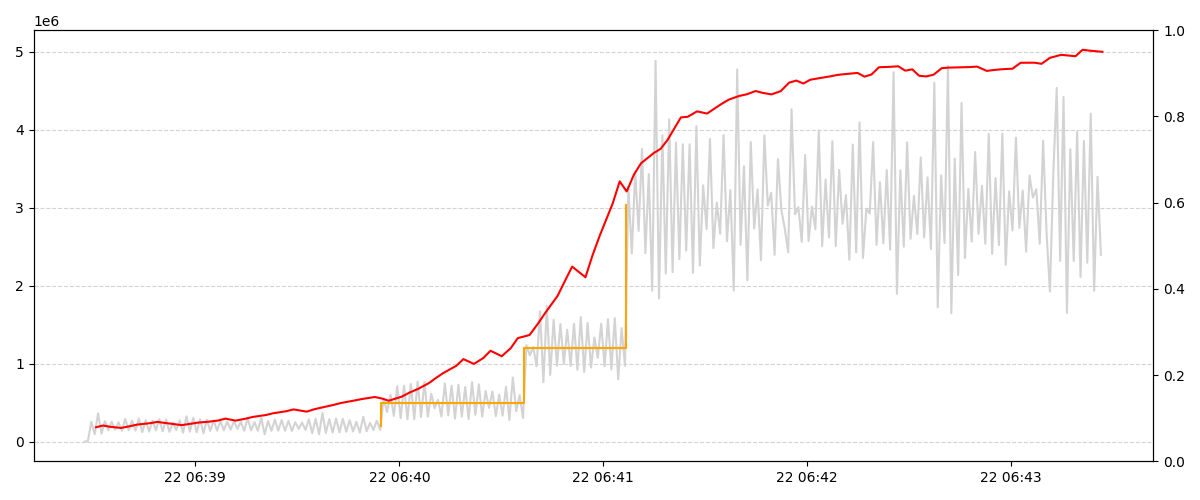

The left scale on the Y axis shows the bitrate in Mbps. The X axis shows hours and minutes.

On the chart below, you can see that REMB-based bitrate estimation failed to raise the target bitrate within 5 minutes, so the system was unable to switch to the highest available video track. In some cases, REMB never brought the target bitrate to the desired value.

With REMB, the chart shows sudden drops and recoveries of the estimated bitrate, while with TWCC we see wave-like changes. It seems that the browser's internal GCC algorithm handles certain situations more strictly.

REMB Mechanism

Plot legend:

- Gray: bytesReceived_in_bits/s metric

- Orange: marks when the system switches between video tracks

- Red: availableIncomingBitrate metric

TWCC Mechanism

Plot legend:

- Gray: bytesReceived_in_bits/s metric

- Orange: marks when the system switches between video tracks

- Red: the current estimate of the available bandwidth from Flussonic's internal algorithms

Things to avoid when developing ABR WebRTC

While developing prototypes of the system, we tried to analyze the delay of all outgoing packets using the same principle as Google Congestion Control, and we imitated a bitrate increase by adding padding to the payload packets. We abandoned this approach because it had no visible benefits; moreover, the padding generated a lot of garbage traffic that wasted network resources.

How to give it a try

At the moment, the TWCC (Transport Wide Congestion Control) implementation in Flussonic is still being tested and polished. By default, Flussonic Media Server uses the REMB mechanism.

If you would like to try our new TWCC implementation, add the bitrate_prober and bitrate_probing_interval options to the stream config. See https://flussonic.com/doc/video-playback/webrtc-playback/#twcc for more information.

Do not hesitate to write to us with your questions and comments at support@flussonic.com.